In my previous post, I showed how to build StreamInsight adapters that receive and send Azure AppFabric messages. In this post, we see how to use these adapters to push events into a cloud-hosted StreamInsight application and send events back out.

As a reminder, our final solution has an on-premises consumer that calls an Azure AppFabric Service Bus endpoint, which relays each event to StreamInsight Austin. Output events are then sent to an Azure AppFabric Service Bus endpoint that relays them to an on-premises listener.

To follow along with this post, you need to be part of the early adopter program for StreamInsight “Austin”. If you aren't, no worries, as you can at least see here how to build cloud-ready StreamInsight applications.

The StreamInsight “Austin” early adopter package contains a sample Visual Studio 2010 project which deploys an application to the cloud. I reused the portions of that solution which provisioned cloud instances and pushed components to the cloud. I changed that solution to use my own StreamInsight application components, but other than that, I made no significant changes to that project.

Let’s dig in. First, I logged into the Windows Azure Portal and found the Hosted Services section.

We need a certificate in order to manage our cloud instance. In this scenario, I am producing a certificate on my machine and sharing it with Windows Azure. In a command prompt, I navigated to a directory where I wanted my physical certificate dropped. I then executed the following command:

```
makecert -r -pe -a sha1 -n "CN=Windows Azure Authentication Certificate" -ss My -len 2048 -sp "Microsoft Enhanced RSA and AES Cryptographic Provider" -sy 24 testcert.cer
```

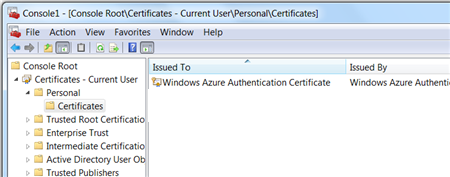

When this command completes, I have a certificate in my directory and see the certificate added to the “Current User” certificate store.

Next, while still in the Certificate Viewer, I exported this certificate (with the private key) out as a PFX. This file will be used with the Azure instance that gets generated by StreamInsight Austin. Back in the Windows Azure Portal, I navigated to the Management Certificates section and uploaded the CER file to the Azure subscription associated with StreamInsight Austin.
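I did the PFX export through the Certificate Viewer, but the same step can be scripted. Below is a minimal sketch using the standard .NET `X509Store` and `X509Certificate2.Export` APIs. The `PfxExporter` class and its method names are hypothetical (they are not part of the Austin package), and the sketch assumes the certificate was created with an exportable private key, as the `-pe` flag in the makecert command requests.

```csharp
using System;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper for illustration; not part of the StreamInsight Austin sample.
public static class PfxExporter
{
    // Looks up a certificate by subject name in the Current User "My" (Personal) store.
    // Returns null when no certificate matches.
    public static X509Certificate2 FindInCurrentUserStore(string subjectName)
    {
        var store = new X509Store(StoreName.My, StoreLocation.CurrentUser);
        store.Open(OpenFlags.ReadOnly);
        try
        {
            // validOnly = false, because a self-signed certificate will not chain to a trusted root.
            var matches = store.Certificates.Find(
                X509FindType.FindBySubjectName, subjectName, false);
            return matches.Count > 0 ? matches[0] : null;
        }
        finally
        {
            store.Close();
        }
    }

    // Serializes the certificate and its private key as password-protected PFX bytes.
    public static byte[] ExportPfx(X509Certificate2 certificate, string password)
    {
        if (certificate == null)
            throw new ArgumentNullException(nameof(certificate));
        if (!certificate.HasPrivateKey)
            throw new InvalidOperationException("The certificate has no private key to export.");

        return certificate.Export(X509ContentType.Pfx, password);
    }
}
```

To use it, pass the subject name from the makecert command (`Windows Azure Authentication Certificate`) to `FindInCurrentUserStore`, then write the bytes returned by `ExportPfx` to a file with `System.IO.File.WriteAllBytes`. The password you choose here is the one the Azure portal asks for later.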

After this, I made sure that I had a “storage account” defined beneath my Windows Azure account. This account is used by StreamInsight Austin and deployment fails if no such account exists.

Finally, I had to create a hosted service underneath my Azure subscription. The window that pops up after clicking the New Hosted Service button on the ribbon lets you put a service under a subscription and define the deployment options and URL. Note that I’ve chosen the “do not deploy” option since I have no package to upload to this instance.

The last pre-deployment step is to associate the PFX certificate with this newly created Azure instance. When doing so, you must provide the password set when exporting the PFX file.

Next, I went to the Visual Studio solution provided with the StreamInsight Austin download. There are a series of projects in this solution and the ones that I leveraged helped with provisioning the instance, deploying the StreamInsight application, and deleting the provisioned instance. Note that there is a RESTful API for all of this and these Visual Studio projects just wrap up the operations into a C# API.

The provisioning project has a configuration file that must contain references to my specific Azure account. These settings include:

- SubscriptionId: the GUID associated with my Azure subscription
- HostedServiceName: matches the Azure service I created earlier
- StorageAccountName: the name of the storage account for the subscription
- StorageAccountKey: the giant value visible by clicking “View Access Keys” on the ribbon
- ServiceManagementCertificateFilePath: the location on the local machine where the PFX file sits
- ServiceManagementCertificatePassword: the password provided for the PFX file
- ClientCertificatePassword: the value used when the provisioning project creates a new certificate
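Since provisioning takes several minutes and fails late if a value is missing, it can help to sanity-check these settings before running the project. The following is a minimal sketch, not code from the Austin package: the `ProvisioningSettingsCheck` class is hypothetical, and it assumes you have already loaded the settings into a dictionary keyed by the setting names listed above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration; not part of the StreamInsight Austin sample.
public static class ProvisioningSettingsCheck
{
    // Setting names as they are listed in this post.
    public static readonly string[] RequiredKeys =
    {
        "SubscriptionId",
        "HostedServiceName",
        "StorageAccountName",
        "StorageAccountKey",
        "ServiceManagementCertificateFilePath",
        "ServiceManagementCertificatePassword",
        "ClientCertificatePassword"
    };

    // Returns the names of required settings that are absent or blank.
    public static IList<string> FindMissing(IDictionary<string, string> settings)
    {
        return RequiredKeys
            .Where(key => !settings.TryGetValue(key, out var value)
                          || string.IsNullOrWhiteSpace(value))
            .ToList();
    }

    // The subscription ID is a GUID, so a value that does not parse is a likely typo.
    public static bool IsValidSubscriptionId(string value)
    {
        return Guid.TryParse(value, out _);
    }
}
```

Calling `FindMissing` with the loaded settings returns an empty list when every value is present; anything else is worth fixing before you kick off provisioning.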

Next, I ran the provisioning project which created a new certificate and invoked the StreamInsight Austin provisioning API that puts the StreamInsight binaries into an Azure instance.

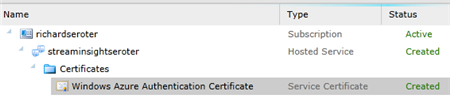

When the provisioning is complete, you can see the newly created instance and certificates.

Neat. It all completed in 5 or so minutes. Also note that the newly created certificate is in the “Current User” certificate store.

I then switched to the “deployment” project provided by StreamInsight Austin. There are new components that get installed with StreamInsight Austin, including a Package class. The Package contains references to all of the components that must be uploaded to the Windows Azure instance in order for the query to run. In my case, I need the Azure AppFabric adapter, my “shared” component, and the Microsoft.ServiceBus.dll that the adapters use.

```csharp
PKG.Package package = new PKG.Package("adapters");
package.AddResource(@"Seroter.AustinWalkthrough.SharedObjects.dll");
package.AddResource(@"Seroter.StreamInsight.AzureAppFabricAdapter.dll");
package.AddResource(@"Microsoft.ServiceBus.dll");
```

After updating the project to have the query from my previously built “onsite hosting” project, and updating the project’s configuration file to include the correct Azure instance URL and certificate password, I started up the deployment project.

You can see that my deployment is successful and my StreamInsight query was started. I can use the RESTful APIs provided by StreamInsight Austin to check on the status of my provisioned instance. By hitting a specific URL (https://azure.streaminsight.net/HostedServices/{serviceName}/Provisioning), I see the details.
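If you would rather check the status from code than from a browser, here is a rough sketch. The URL pattern comes straight from the address above, but the rest is assumption: the `AustinStatusClient` class is hypothetical, and I am assuming the service authenticates the caller with the client certificate (PFX) created during provisioning. It only uses standard .NET types (`HttpWebRequest`, `X509Certificate2`).

```csharp
using System;
using System.IO;
using System.Net;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper for illustration; not part of the StreamInsight Austin sample.
public static class AustinStatusClient
{
    // Base address taken from the provisioning URL quoted in this post.
    private const string BaseAddress = "https://azure.streaminsight.net";

    // Builds https://azure.streaminsight.net/HostedServices/{serviceName}/Provisioning
    public static Uri BuildProvisioningUri(string serviceName)
    {
        if (string.IsNullOrWhiteSpace(serviceName))
            throw new ArgumentException("A hosted service name is required.", nameof(serviceName));

        return new Uri(BaseAddress + "/HostedServices/"
                       + Uri.EscapeDataString(serviceName) + "/Provisioning");
    }

    // Issues a GET against the provisioning URL and returns the raw response body.
    // Assumption: the service accepts the client certificate stored in the given PFX file.
    public static string GetProvisioningStatus(string serviceName, string pfxPath, string pfxPassword)
    {
        var request = (HttpWebRequest)WebRequest.Create(BuildProvisioningUri(serviceName));
        request.Method = "GET";
        request.ClientCertificates.Add(new X509Certificate2(pfxPath, pfxPassword));

        using (var response = (HttpWebResponse)request.GetResponse())
        using (var reader = new StreamReader(response.GetResponseStream()))
        {
            return reader.ReadToEnd();
        }
    }
}
```

Pass the same hosted service name you used in the provisioning configuration, along with the path and password of the client certificate PFX.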

With the query started, I turned on my Azure AppFabric listener service (which receives events from StreamInsight) and my service caller. The data should flow to the Azure AppFabric endpoint, through StreamInsight Austin, and back out to an Azure AppFabric endpoint.

Content that everything works, and scared that I’d incur runaway hosting charges, I ran the “delete” project, which removed my Azure instance and all traces of the application.

All in all, it’s a fairly straightforward effort. Your onsite StreamInsight application transitions seamlessly to the cloud. As mentioned in the first post of the series, the big caveat is that you need event sources that are accessible by the cloud instance. I leveraged Windows Azure AppFabric to receive events, but you could also do a batch load from an internet-accessible database or file store.

When would you use StreamInsight Austin? I can think of a few scenarios that make sense:

- First and foremost, if you have a wide range of event sources, including cloud hosted ones, having your complex event processing engine close to the data and easily accessible is compelling.

- Second, Austin makes good sense for variable workloads. We can run the engine when we need to, and if it only operates on batch data, we can shut it down when not in use. This scenario will be even more compelling once the transparent and elastic scale out of StreamInsight Austin is in place.

- Third, we can use it for proof-of-concept scenarios without requiring on-premises hardware. By using a service instead of maintaining on-site hardware, you offload your management and maintenance to StreamInsight Austin.

StreamInsight Austin is slated for a public CTP release later this year, so keep an eye out for more info.

Hello Mr Seroter,

Would it be possible to share this project too? I’ve followed part 1 and was able to get it running pretty easily. The sample application from Austin fails after two post requests (404) so I would like to know if you’ve had that error too and how you fixed it.

Having your project would be even better to gain some time and learn faster.

Thanks in advance!

Henry

Hi Henry. So the “test” application fails? Everything gets deployed, but you can’t actually prove that it works? There have been some breaking changes in Austin since I built this, so I’d doubt the original code would work either!

Hello Mr Seroter,

I’ve been able to get it running. There was one config missing in the documentation (or I missed it), concerning the connectionString for the Azure Tables. Once I entered the correct connectionstring, it all worked fine.

In the meantime I have added a Service Bus Queue sink which works perfectly too.

Thank you very much for your blog posts, they’ve helped me many times before!